Machine translation is now commonplace, and many people use it daily. The increasing quality of online services like Google Translate makes them useful not only for personal needs but even for professional translators.

However, automatic translators have been this good for no more than the past five years. Most of us remember how they used to struggle with anything longer than a few-word phrase. So how did the machine translation breakthrough happen? Let’s find out.

The Dawn of Machine Translation

The term “machine translation” (MT) refers to the process of translating one natural language into another by means of special software. Such software can be installed directly on a computer (or mobile device) or be available via the Internet.

The idea of using a computing device for linguistic translation first appeared in 1947. At the time, however, implementing it was simply impossible because computer technology was in its infancy.

The first attempt at machine translation followed in 1954. Its dictionary included only 250 words, and its grammar was limited to 6 rules. Nevertheless, this was enough to convince linguists and engineers of the great future of machine translation. Active work in this direction began in many countries, and the first machine translation systems and specialized MT theories began to appear.

Essential Machine Translation Methods

What is the easiest translation method that comes to mind? To use a dictionary - that is, to replace all words in the sentence with their equivalents in another language. However, this approach has an obvious drawback: the order of words in different languages can be different, and sometimes quite dramatically.
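As a rough illustration of the dictionary method and its word-order problem, here is a minimal Python sketch. The tiny English-to-German dictionary and the function name `translate_word_by_word` are made up for this example and do not come from any real system.

```python
# Toy English-to-German dictionary (illustrative entries only, an assumption
# for this sketch; a real dictionary would be far larger).
TOY_DICTIONARY = {
    "i": "ich",
    "have": "habe",
    "seen": "gesehen",
    "the": "den",
    "dog": "Hund",
}


def translate_word_by_word(sentence, dictionary):
    """Replace each word with its dictionary equivalent, keeping source word order."""
    words = sentence.lower().split()
    # Words missing from the dictionary are passed through unchanged.
    return " ".join(dictionary.get(word, word) for word in words)


print(translate_word_by_word("I have seen the dog", TOY_DICTIONARY))
# prints "ich habe gesehen den Hund"
```

Every word is translated correctly, yet the result keeps the English word order. A German speaker would put the participle at the end: “Ich habe den Hund gesehen”.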

The first improvement to this basic model was to allow word permutations. How can these permutations be predicted? In addition to a dictionary, there is a so-called alignment model that defines which words in the two sentences correspond to each other. The alignment is estimated from statistics gathered over a large amount of translated text.
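To make the role of the alignment more concrete, the following Python sketch applies a word permutation on top of dictionary lookup. The alignment here is written by hand purely for illustration; in a real statistical system it would be predicted by a model trained on large amounts of data. The names `TOY_DICTIONARY` and `translate_with_alignment` are hypothetical.

```python
# Toy English-to-German dictionary (an assumption made for this sketch).
TOY_DICTIONARY = {
    "i": "ich",
    "have": "habe",
    "seen": "gesehen",
    "the": "den",
    "dog": "Hund",
}


def translate_with_alignment(source_words, dictionary, alignment):
    """Translate word by word, then order the words according to the alignment.

    alignment[j] is the index of the source word whose translation is placed
    at target position j.
    """
    return [dictionary.get(source_words[i], source_words[i]) for i in alignment]


source = ["i", "have", "seen", "the", "dog"]
# Hand-written alignment: the participle "seen" (index 2) moves to the end.
alignment = [0, 1, 3, 4, 2]

print(" ".join(translate_with_alignment(source, TOY_DICTIONARY, alignment)))
# prints "ich habe den Hund gesehen"
```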

One disadvantage of this improved method is that it takes a lot of effort to prepare the data on which the alignment is based: professional translators must not only translate the text but also indicate which word is the translation of which. This implies lots of work and lots of computing power, which makes developing machine translation systems an expensive endeavour that only large companies can afford.

Popular modern examples of such systems include Google Translate and Microsoft’s Bing Translator.

Further Advancement with Neural Networks

In 2014, Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio published a paper entitled “Neural Machine Translation by Jointly Learning to Align and Translate”. In this work, the authors proposed building a single neural network that can be jointly tuned to maximize translation performance.

With the help of a neural network, the authors achieved significant results in machine translation. Their results set a new benchmark for applying neural networks to linguistic tasks. It looked as if the network could actually “understand” the sentence when translating it.

Attention Mechanism

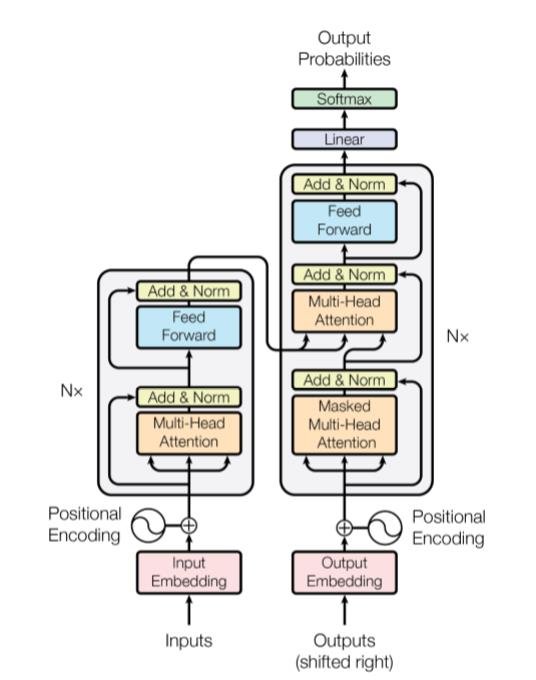

The paper “Attention Is All You Need” by Ashish Vaswani, Noam Shazeer, and others marks the second coming of fully connected networks to the NLP arena. Its authors, who worked at Google Brain and Google Research, introduced a new, simple network architecture, the Transformer, based solely on attention mechanisms.

The Transformer model from “Attention Is All You Need”

The idea behind the attention mechanism is that, in order to decode better, we have to focus on the parts of the encoder input that are relevant at the current step. At its simplest, relevance is defined as the similarity of each input to the current output (see the diagram). These similarities are turned into weights that sum to 1, with the highest weight corresponding to the most relevant input, and the result of attention is the weighted sum of the inputs.
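The weighted sum described above can be sketched in a few lines of plain Python. This is a simplified illustration, not the exact formulation of either paper: similarity is assumed to be a dot product, the weights come from a softmax, and the vectors `encoder_states` and `decoder_state` are made-up toy values.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, inputs):
    """Return attention weights and the weighted sum of the inputs."""
    # Similarity of each input to the query (dot product is an assumption here).
    scores = [sum(q * x for q, x in zip(query, vec)) for vec in inputs]
    weights = softmax(scores)
    dim = len(inputs[0])
    context = [sum(w * vec[k] for w, vec in zip(weights, inputs)) for k in range(dim)]
    return weights, context


# Toy encoder inputs and a toy decoder state (illustrative values only).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]

weights, context = attend(decoder_state, encoder_states)
print(weights)  # the second input gets the lowest weight
print(context)
```

The second encoder state has no overlap with the decoder state, so it receives the smallest weight and contributes least to the result.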

In contrast to the classical approach, the authors of this work proposed so-called self-attention on the input data. The word “self” in this case means that attention is applied to the same data from which it is calculated. In the classical approach, by contrast, attention is calculated with respect to some additional input (for example, the current state of the decoder) rather than the data to which it is applied.
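The difference can be illustrated with a small Python sketch in which every input vector acts as its own query over the whole sequence. This is a deliberately stripped-down version: the actual Transformer also applies learned query, key, and value projections and uses several attention heads, all of which are omitted here. The function name `self_attention` and the sample vectors are assumptions for this example.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(inputs):
    """Apply attention where queries, keys, and values are all the same inputs."""
    dim = len(inputs[0])
    outputs = []
    for query in inputs:  # each position attends over the whole sequence
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in inputs
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * vec[k] for w, vec in zip(weights, inputs)) for k in range(dim)]
        )
    return outputs


# Toy word vectors (illustrative values only).
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for vector in self_attention(sequence):
    print(vector)
```

No external query is needed: the sequence alone determines both the weights and the values being averaged, so the output contains one new vector per input position.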

Stop Paralleling

Another interesting direction in machine translation is translation without parallel data. It was made possible by the above-mentioned use of neural networks, which enabled scientists to abandon alignment markup in the translated texts used to train a machine translation model. Building on this, the authors of “Unsupervised Machine Translation Using Monolingual Corpora Only” presented a system that was able to translate from English into French with only 10% lower quality than the best and most powerful MT solutions of the time. Interestingly, the same authors further refined their approach with ideas from phrase-based translation later that year.

Non-Autoregressive Models

Finally, there’s another promising approach to MT, so-called non-autoregressive translation. What is it? All the models mentioned above produce a translation word by word, using the previously translated words to choose each new one. The authors of the paper titled “Non-Autoregressive Neural Machine Translation” tried to get rid of this dependence. The quality of translation becomes slightly lower, but translation can be ten times faster than with autoregressive models. Considering that modern models can be very large and unwieldy, this is a significant gain that can make a crucial difference for some applications.
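The contrast between the two decoding styles can be sketched in Python as follows. This is only a toy illustration of the dependency structure, not the method from the paper: the “models” are simple lookups in a made-up lexicon, and the output is assumed to have the same length as the input. All names here are hypothetical.

```python
# Toy English-to-French lexicon standing in for a trained model (an assumption).
TOY_LEXICON = {"the": "le", "cat": "chat", "sleeps": "dort"}


def predict_next_word(source_words, prefix):
    """Stand-in for one autoregressive step: it needs the words produced so far."""
    position = len(prefix)
    return TOY_LEXICON[source_words[position]]


def predict_word_at(source_words, position):
    """Stand-in for a non-autoregressive model: it needs only source and position."""
    return TOY_LEXICON[source_words[position]]


def decode_autoregressive(source_words):
    output = []
    # Each step has to wait for the previous one, so the loop is inherently sequential.
    for _ in source_words:
        output.append(predict_next_word(source_words, output))
    return output


def decode_non_autoregressive(source_words):
    # Positions are independent of each other, so on suitable hardware
    # all of these predictions could be computed at the same time.
    return [predict_word_at(source_words, i) for i in range(len(source_words))]


sentence = ["the", "cat", "sleeps"]
print(decode_autoregressive(sentence))      # ['le', 'chat', 'dort']
print(decode_non_autoregressive(sentence))  # ['le', 'chat', 'dort']
```

In this toy case both decoders give the same output. The potential speed-up of the second one comes from removing the step-by-step dependence, which is also why a real non-autoregressive model can lose some quality: each word is chosen without seeing its neighbours.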