Google Cloud Data Fusion is a fully managed cloud service from Google that provides a graphical user interface for building data pipelines: from ingesting data from a wide range of sources, through applying transformations, to loading the results into data warehousing and BI solutions. It simplifies individual data engineering tasks and makes it possible to create reusable data pipelines across the entire organization. Based on the open-source CDAP framework, Cloud Data Fusion gains further usability from being fully integrated with and supported by Google as part of the Google Cloud Platform (GCP).

The concept of a digital enterprise has evolved significantly over the years. First used to describe any business that takes advantage of digital technology, it is now also commonly associated with the use of technologies for automated data collection, data analytics, and data-driven decision making. It is no surprise that Google, whose algorithms crawl and analyze millions of websites on a daily basis, is also gaining leadership in the enterprise data management field. Its recent product, Cloud Data Fusion, can give it an even stronger competitive edge.

In a nutshell, Cloud Data Fusion allows users to quickly build and manage ETL/ELT data pipelines. The best part is that instead of having to write tons of code to connect a data source to a warehouse, data specialists can use a graphical interface to build the necessary pipelines in a drag-and-drop manner. This lets them focus on the actual data analytics and on deriving insights for better customer service and operational efficiency.

1. Data transformation with Google Cloud Data Fusion

WHAT IS A DATA PIPELINE AND HOW CAN GOOGLE CDF HELP?

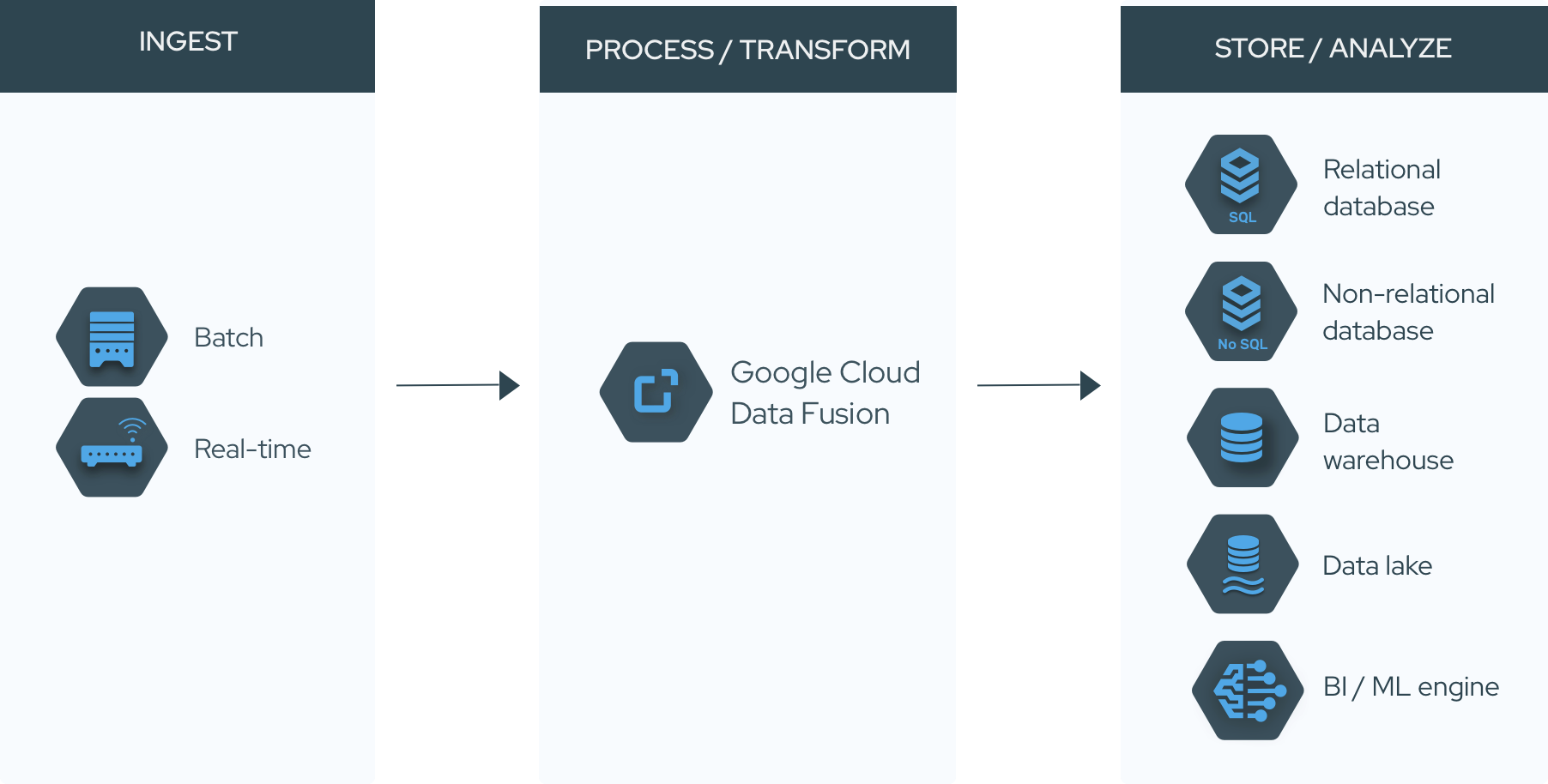

A data pipeline is a data engineering software solution that transports data from its original sources to another cloud-based or on-premises system, data warehouse, or data lake, enriching and cleansing it as necessary along the way. A data pipeline enables you to pull data from any number of sources, thus taking care of data integration before any data analytics tools are applied. These data sources can be anything from cloud storage to application logs, IoT device telemetry, online transactions, and social media posts. Integrating diverse data into a single pool can help you go beyond “tunnel vision” analytics to a more comprehensive view of your business performance.

Typically, a data engineer would have to create a specific connector for each new data source to add it to a pipeline. But with a tool like Google Cloud Data Fusion, which is based on the open-source CDAP framework, you can take advantage of a broad open-source library of preconfigured connectors to get the job done. It also provides a set of popular transformations, which you can use to cleanse and unify diverse data in your pipeline. These two features alone can save you tons of coding.

But what makes Google CDF a real killer is, of course, its drag-and-drop interface for building data pipelines. Code-free deployment of data pipelines means that even less technical data analysts or managers can take care of what used to be a complex data engineering task, and do it fast. It can also streamline your data engineers’ daily routines so that they can focus more on building data applications than on data ingestion and preparation.

Finally, thanks to its open-source CDAP core, Google CDF offers a wide range of integration options with on-premises or public cloud platforms for data warehousing and analytics. This covers the final, load step of a typical ETL (extract-transform-load) data pipeline.
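
To make the extract-transform-load steps concrete, here is a minimal plain-Python sketch of the kind of hand-written pipeline that a graphical tool like CDF is meant to replace. It is not CDF or CDAP code: the sample data, the column names, and the `extract`, `transform`, and `load` helpers are all hypothetical and chosen only for illustration.

```python
import csv
import io
import json

# Hypothetical raw export from a source system (stands in for a real connector).
RAW_CSV = """customer_id,country,amount
1, us ,10.50
2,DE,
3,us,7.25
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows without an amount and normalize country codes."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # cleansing: skip incomplete records
        cleaned.append({
            "customer_id": int(row["customer_id"]),
            "country": row["country"].strip().upper(),
            "amount": float(amount),
        })
    return cleaned

def load(rows, sink):
    """Load: write one JSON document per line to a file-like sink."""
    for row in rows:
        sink.write(json.dumps(row) + "\n")
    return len(rows)

sink = io.StringIO()  # stands in for a warehouse table
loaded = load(transform(extract(RAW_CSV)), sink)
print(loaded, "rows loaded")
```

Even in this toy form, each new source format or cleansing rule means more code to write and maintain, which is the work that preconfigured connectors and transformations are intended to take off your hands.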

GOOGLE CLOUD DATA FUSION FEATURES AT A GLANCE

Here’s a quick recap of Cloud Data Fusion’s main functionality and benefits:

- Code-free design and deployment of data pipelines – enables data engineers as well as non-technical users to quickly set up and configure data pipelines via an intuitive graphical interface.

- Extensive libraries of connectors and transformations – ready-to-go connectors for modern applications and online services to quickly set up data ingestion for your data pipeline, in both batch and real-time streaming modes; preconfigured code-free transformations for data standardization and unification.

- Freedom of deployment infrastructure – based on the open-source CDAP framework, Google CDF offers the flexibility you may need to build data applications across private, hybrid, or multi-cloud environments.

- Enterprise-grade collaboration, security, and performance – collaborative features to enable sharing and validation of custom connectors and transformations, integrated cloud identity and access management for data protection, and performance tracking and fault prevention tools.

- Straightforward integration with GCP and related solutions – Google CDF allows engineers to easily utilize numerous other data engineering/analytics solutions and technologies that are available on Google Cloud Platform.

You can see what it has to offer for yourself. See this Quickstart guide to get started.

CDF WITHIN THE FAMILY OF GOOGLE DATA ANALYTICS PRODUCTS

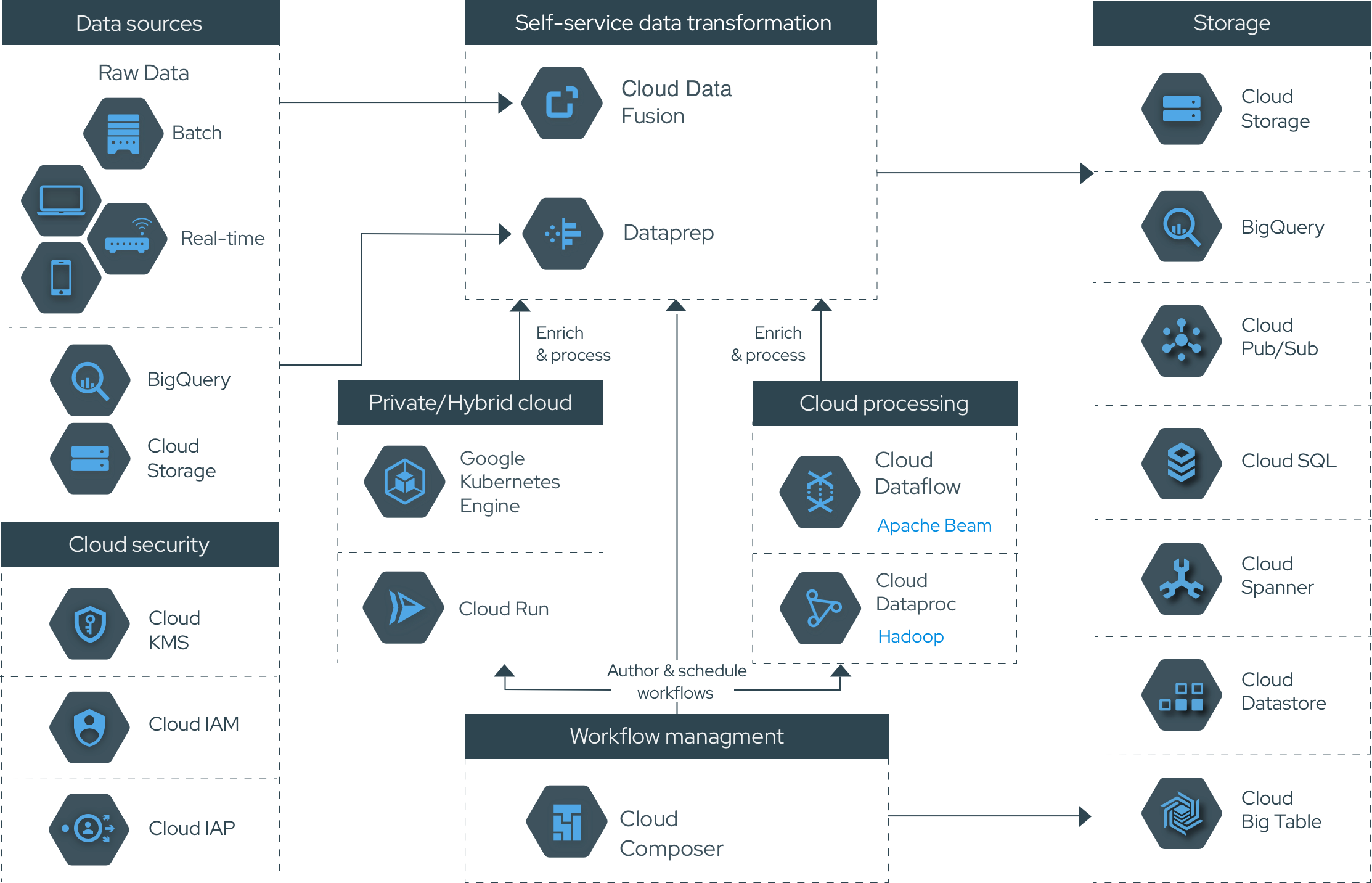

Cloud Data Fusion is the central part of Google’s rapidly evolving data analytics ecosystem for enterprise users. It connects your data sources to Google’s cloud storage, BI, event and messaging, and data visualization tools while offering strong self-service capabilities.

2. Cloud Data Fusion within the family of Google Data Analytics Products

Cloud Data Fusion is set to appeal specifically to business users and corporate data science departments. Even though you could build ETL pipelines by using Google’s Dataproc and Dataflow solutions alone, it is much easier with Cloud Data Fusion. And compared to Dataprep, which also provides a graphical UI for building data pipelines, CDF is more flexible: it can connect to a broad variety of data sources, uses CDAP libraries of preconfigured connectors and transformations, and offers freedom of deployment based on open source.

ENTERPRISE USE CASES FOR GOOGLE CDF

Using ETL/ELT data pipelines is a part of modern Big Data analytics, and a great number of companies are already on board with this technology. According to a study by Dresner Advisory Services, Big Data adoption in enterprises soared from 17% in 2015 to 59% in 2018, reaching a Compound Annual Growth Rate (CAGR) of 36%. Industries like Financial Services, Insurance, and Advertising have already surpassed 70% in Big Data adoption, while Telecom reached a staggering 95%.

The same study shows that Data Warehouse Optimization, Forecasting, and Customer/Social Analysis are the three most critical big data analytics use cases in enterprises today. The IoT is also among the top seven. As companies across all major industries have gained access to new sources of data, they are now trying to understand how to make the most of it. Therefore, forecasting is the most common business application of Big Data analytics, commonly found in the financial and insurance sectors. In the SaaS, IT, and Telecom worlds, the spotlight is on customer analysis and understanding how to improve user engagement. In manufacturing, predictive maintenance is what brings the biggest value.

For all these use cases, companies want to see how they can maximize ROI and drive down operational cost and risk. That’s why efficient data warehousing remains a key priority. From this perspective, the introduction of Cloud Data Fusion is a clear move on the part of Google to streamline data engineering tasks for enterprise users. As one of the most popular cloud infrastructure providers, with an expanding set of data analytics products, Google aims to offer an end-to-end ecosystem of tools for modern data-driven companies. And so far it’s shaping up very well.

GOOGLE CDF FOR TYPICAL DATA ENGINEERING TASKS

Here’s how Google CDF will help your data engineers in their daily chores.

Data Warehouse Management

For a modern data warehouse, data may be collected from dozens or even hundreds of different types of sources. CDF allows you to connect to such sources and ingest data ad hoc with little or no coding.

Data Consolidation

CDF can join data from several sources that store it in different formats, so that analytics can be performed on the combined data.
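
As an illustration of what consolidation involves, the plain-Python sketch below joins a CSV export with a JSON export on a shared customer id and totals spend per customer. It is not CDF code; the sample data, field names, and the `consolidate` helper are hypothetical.

```python
import csv
import io
import json

# Hypothetical sample data: orders exported as CSV, customers as JSON.
ORDERS_CSV = """order_id,customer_id,total
100,1,25.00
101,2,40.00
102,1,15.50
"""
CUSTOMERS_JSON = '[{"id": 1, "name": "Acme"}, {"id": 2, "name": "Globex"}]'

def consolidate(orders_csv, customers_json):
    """Join orders to customers on customer id and total spend per customer."""
    names = {c["id"]: c["name"] for c in json.loads(customers_json)}
    totals = {}
    for order in csv.DictReader(io.StringIO(orders_csv)):
        # Orders whose customer is not in the JSON export are grouped as "unknown".
        name = names.get(int(order["customer_id"]), "unknown")
        totals[name] = totals.get(name, 0.0) + float(order["total"])
    return totals

spend = consolidate(ORDERS_CSV, CUSTOMERS_JSON)
print(spend)
```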

Data Migration

As a common use case, Data Fusion enables you to transfer data from legacy mainframes to the cloud as a single batch execution, as scheduled incremental executions, or in real time. It also allows you to implement and upload custom connectors for any source or sink, including legacy mainframes.
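
One common way to implement scheduled incremental executions is to track a "watermark", the latest change timestamp already migrated, and move only newer rows on each run. The sketch below shows that idea in plain Python. It is an assumption about how such a job could be written by hand, not a description of how Data Fusion does it internally; the `updated_at` column and the `incremental_batch` helper are hypothetical.

```python
# Hypothetical legacy records; "updated_at" is an assumed ISO-8601 timestamp column.
LEGACY_ROWS = [
    {"id": 1, "updated_at": "2019-01-01T00:00:00"},
    {"id": 2, "updated_at": "2019-02-01T00:00:00"},
    {"id": 3, "updated_at": "2019-03-01T00:00:00"},
]

def incremental_batch(rows, watermark):
    """Return the rows changed after the watermark, plus the new watermark."""
    # ISO-8601 strings in the same format sort chronologically as plain strings.
    changed = [r for r in rows if r["updated_at"] > watermark]
    new_watermark = max((r["updated_at"] for r in changed), default=watermark)
    return changed, new_watermark

# A scheduled run migrates only rows newer than the stored watermark.
batch, mark = incremental_batch(LEGACY_ROWS, "2019-01-15T00:00:00")
# A following run with no new changes migrates nothing.
empty, mark2 = incremental_batch(LEGACY_ROWS, mark)
print([r["id"] for r in batch], mark, empty)
```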

Master Data Management

Data Fusion can act as a data hub between a supplier’s and a customer’s data stores, synchronizing the necessary data according to predefined business rules.

Data Consistency

Data consistency is ensured through reconciliation, that is, by comparing the same data stored in different locations. This is usually required for compliance purposes or to verify the quality of data transmitted between different corporate systems.
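
A reconciliation check can be sketched in a few lines of plain Python: fingerprint each row in both locations and report keys that are missing, extra, or different. This is a generic illustration under assumed data, not CDF functionality; the `row_fingerprint` and `reconcile` helpers and the sample tables are hypothetical.

```python
import hashlib
import json

def row_fingerprint(row):
    """Stable hash of a row, independent of key order."""
    payload = json.dumps(row, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def reconcile(source, target, key="id"):
    """Compare two lists of dict rows; report missing, extra, and changed keys."""
    src = {r[key]: row_fingerprint(r) for r in source}
    tgt = {r[key]: row_fingerprint(r) for r in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

# Hypothetical copies of the same table held in two systems.
source = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": "c@example.com"},
]
target = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "B@example.com"},
    {"id": 4, "email": "d@example.com"},
]
report = reconcile(source, target)
print(report)
```

Hashing whole rows keeps the comparison cheap when rows are wide, at the cost of not telling you which field differs; a field-by-field diff on the mismatched keys would be the natural follow-up.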

GOOGLE CDF SUPPORT SERVICES FROM CYBERVISION

To help organizations succeed with Google Cloud Platform and its Data Analytics products, CyberVision provides expert software engineering, consulting, and system support services. Our Google-certified engineers can help you integrate new Google products into your application stack as well as migrate your solutions to Google Cloud.

Although Cloud Data Fusion was launched only recently, we have already implemented it for some of our clients using Google Cloud Platform. In one case, we successfully migrated a major telecom operator to GCP and built the requested data pipelines on top of Cloud Data Fusion. Our secret is that we had been working with CDAP, the open-source framework behind CDF, long before Google turned it into a commercial product. We also offer full-cycle CDAP support services.

If you want to learn more about our services in reference to Google Cloud Platform, Cloud Data Fusion, or CDAP, don’t hesitate to contact us.