Less than a year ago, in May 2018, Cask Data, a Palo Alto startup specializing in big data analytics, joined Google Cloud. Today we would like to congratulate our friends at Cask on the launch of their flagship product, CDAP, on Google Cloud Platform as Cloud Data Fusion.

CDAP (Cask Data Application Platform) is an open-source, Apache 2.0 licensed framework for building data transformation and analytics applications. In production use for over five years, CDAP runs on top of a variety of Hadoop distributions, including the Hortonworks® Data Platform, MapR Converged Data Platform, and Cloudera Enterprise Data Hub. CDAP's code-free visual interface for building data processing pipelines offers a gentle learning curve for users with varying levels of prior exposure to big data analytics.

Cloud Data Fusion (CDF) is fully managed and runs its data processing pipelines on Google Cloud Dataproc, so it requires no infrastructure management. You can choose to run pipelines on the MapReduce, Spark, or Spark Streaming engines. With the open-source version of CDAP at its core, CDF makes it simple to port pipelines from on-premises installations.

CDF is a valuable addition to the Google Cloud Platform. Previously, data transformation and processing with Dataflow or Dataproc required extensive coding skills. Cloud Data Fusion takes a step forward by providing a fully integrated and intuitive visual interface that greatly simplifies these tasks.

Cloud Data Fusion became publicly available this week during the Google Cloud Next’19 event. At the end of March 2019, the CDAP team conducted a Cloud Data Fusion training for partners, and the CyberVision team had the privilege of getting a first glimpse of Cloud Data Fusion and trying it out in action.

Cloud Data Fusion offers an extensive set of data aggregation and analysis tools in a single package. The functionality of these tools is central to some of CyberVision’s ongoing projects.

CDF pipelines can be either batch or real-time. Batch applications can be run manually, on a time schedule, or by another trigger. Real-time applications run continuously, obtain data as it becomes available, and process it immediately. This flexibility is important for handling different kinds of tasks efficiently.

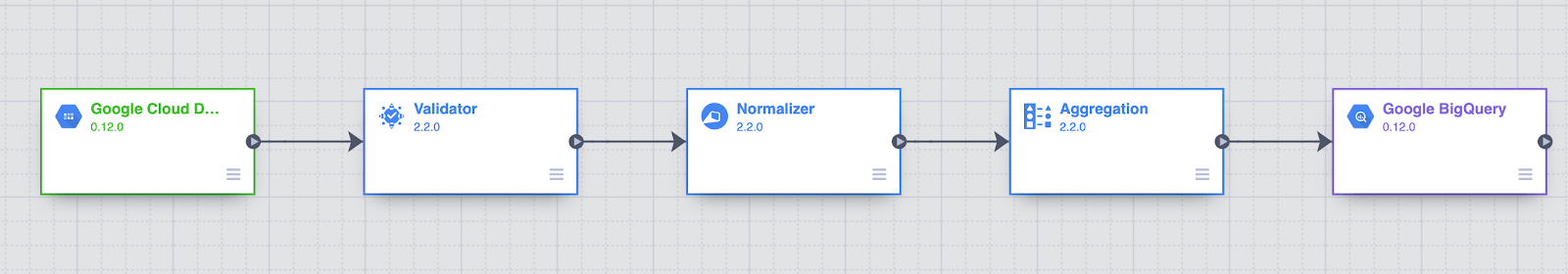

Let’s take a look at an example CDF pipeline. Assume that raw, semi-structured medical data is loaded into Cloud Datastore. Our goal is to validate data ranges and dates, normalize the records by renaming certain fields, and aggregate them by patient ID and date. In the end, the aggregated data is loaded into BigQuery so it can be used by analytics tools for further exploration and analysis. CDF comes ready with tools to do the necessary data manipulations.
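To make the stages of this example concrete, here is a minimal plain-Python sketch of the same logic: validate, rename, then aggregate by patient ID and date. It is not CDF or CDAP code. The field names (`pid`, `ts`, `hr`), the heart-rate range, and the sample records are all hypothetical, chosen only for illustration. In CDF itself these steps would be assembled visually from ready-made plugins.

```python
from collections import defaultdict
from datetime import date

# Hypothetical raw records; field names and values are illustrative assumptions.
RAW = [
    {"pid": "p1", "ts": "2019-03-01", "hr": 72},
    {"pid": "p1", "ts": "2019-03-01", "hr": 88},
    {"pid": "p2", "ts": "2019-03-02", "hr": 400},  # out of range, dropped
    {"pid": "p2", "ts": "not-a-date", "hr": 65},   # invalid date, dropped
    {"pid": "p2", "ts": "2019-03-02", "hr": 60},
]

# Assumed renaming scheme and plausible-value range (not from the article).
RENAMES = {"pid": "patient_id", "ts": "visit_date", "hr": "heart_rate"}
HEART_RATE_RANGE = (20, 250)


def is_valid(record):
    """Keep a record only if its date parses and its reading is in range."""
    try:
        date.fromisoformat(record["ts"])
    except (KeyError, TypeError, ValueError):
        return False
    low, high = HEART_RATE_RANGE
    reading = record.get("hr")
    return isinstance(reading, (int, float)) and low <= reading <= high


def normalize(record):
    """Rename fields according to RENAMES; unknown fields pass through."""
    return {RENAMES.get(name, name): value for name, value in record.items()}


def aggregate(records):
    """Group by (patient_id, visit_date) and compute count and mean reading."""
    groups = defaultdict(list)
    for record in records:
        key = (record["patient_id"], record["visit_date"])
        groups[key].append(record["heart_rate"])
    return [
        {
            "patient_id": patient_id,
            "visit_date": visit_date,
            "readings": len(values),
            "avg_heart_rate": sum(values) / len(values),
        }
        for (patient_id, visit_date), values in sorted(groups.items())
    ]


def run_pipeline(raw):
    """Chain the three stages: validate, normalize, aggregate."""
    valid = (record for record in raw if is_valid(record))
    return aggregate(normalize(record) for record in valid)


if __name__ == "__main__":
    for row in run_pipeline(RAW):
        print(row)
```

The rows returned by `run_pipeline` correspond to what the final stage would load into BigQuery. Each function maps roughly to one node in a visual pipeline, which is why the stages are kept separate rather than fused into a single loop.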

Cloud Data Fusion lets users build pipelines that ingest data with the BigQuery plugin, making high-volume data ingestion faster and easier. Built on Google’s infrastructure, BigQuery supports fast SQL queries against append-only tables, which makes the plugin particularly helpful for querying massive datasets. Users can transfer their data to BigQuery and process it further in CDF: the result of an import query, or an entire input table, is exported to a temporary Google Cloud Storage directory and from there read into the CDF source.

Google Cloud Datastore is a NoSQL document database on Google Cloud Platform, designed for automatic scaling, high performance, and easy development of web and mobile applications. Based on Google’s Bigtable and Megastore technology stack, Google Cloud Datastore is built to handle large analytical and operational workloads and provides access to data via a RESTful interface.

The Google Cloud Datastore source/sink allows users to build multiple data pipelines in Cloud Data Fusion at once, enabling them to read complete tables from a Google Cloud Datastore instance or to perform inserts/upserts into Google Cloud Datastore tables in batch. When configuring the Google Cloud Datastore plugin, each field defines entity properties via unique keys, which makes it possible to process multiple data types.
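The difference between batch inserts and upserts keyed by a unique entity key can be sketched with a toy in-memory store. This is not the CDF plugin or the Datastore client library. The class `InMemoryKindStore`, the helper `write_batch`, and the field names are hypothetical. The sketch assumes that an insert rejects a key that already exists, while an upsert overwrites it.

```python
class InMemoryKindStore:
    """Toy stand-in for one Datastore kind: entities addressed by a unique key."""

    def __init__(self):
        self._entities = {}

    def insert(self, key, properties):
        # Assumed semantics: inserting an existing key is an error.
        if key in self._entities:
            raise KeyError(f"entity {key!r} already exists")
        self._entities[key] = dict(properties)

    def upsert(self, key, properties):
        # Assumed semantics: create the entity or overwrite the existing one.
        self._entities[key] = dict(properties)

    def get(self, key):
        return self._entities.get(key)


def write_batch(store, rows, key_field, mode="upsert"):
    """Write rows in one pass, using key_field as the unique entity key."""
    write = store.upsert if mode == "upsert" else store.insert
    written = 0
    for row in rows:
        properties = {k: v for k, v in row.items() if k != key_field}
        write(row[key_field], properties)
        written += 1
    return written


if __name__ == "__main__":
    store = InMemoryKindStore()
    rows = [
        {"patient_id": "p1", "avg_heart_rate": 80.0},
        {"patient_id": "p1", "avg_heart_rate": 76.5, "flagged": True},
    ]
    write_batch(store, rows, key_field="patient_id", mode="upsert")
    print(store.get("p1"))
```

Because properties are stored as a plain mapping per key, entities in the same batch can carry different property sets and value types, which is the flexibility the paragraph above refers to.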